Case Study: Scraping Doutu (斗图网)

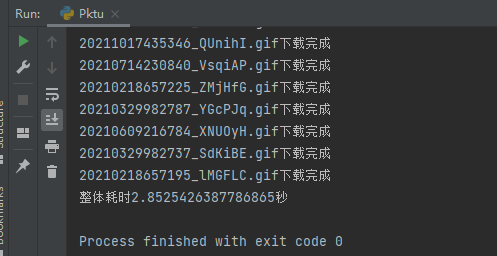

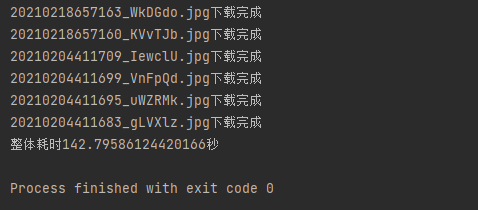

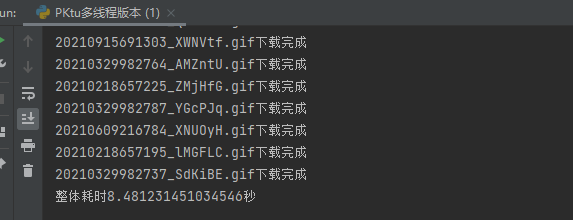

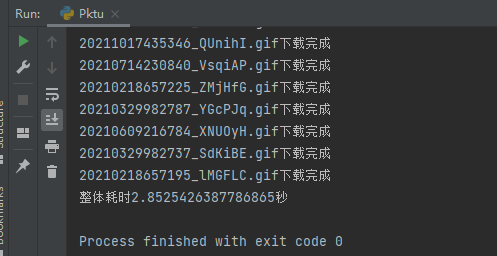

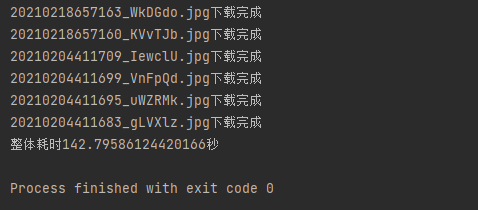

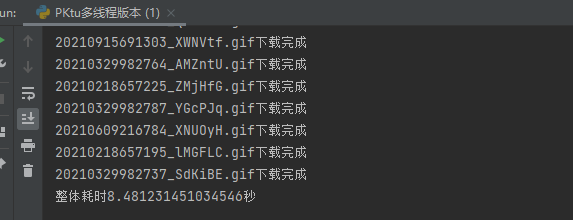

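This post uses Doutu (斗图网) as a case study and scrapes it three ways: single-threaded (took 142 seconds), multi-threaded (took 8 seconds), and asynchronously (under 3 seconds).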
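The gap between those timings comes from the job being I/O-bound: almost all of each download is spent waiting on the network. The sketch below is not the article's code. It replaces each download with a hypothetical `fake_download` that just sleeps for an assumed 0.05 s of "network latency", then runs 20 of them sequentially, with threads, and with asyncio, so the effect can be seen without touching the site.

```python
import asyncio
import threading
import time

DELAY = 0.05  # assumed network wait per download, in seconds
N = 20        # assumed number of images on the page


def fake_download(i):
    # Hypothetical stand-in for requests.get(): blocks the calling thread while "waiting"
    time.sleep(DELAY)
    return i


async def fake_download_async(i):
    # Hypothetical stand-in for an aiohttp request: yields to the event loop while "waiting"
    await asyncio.sleep(DELAY)
    return i


def run_sequential():
    start = time.perf_counter()
    for i in range(N):
        fake_download(i)  # each call starts only after the previous one finishes
    return time.perf_counter() - start


def run_threads():
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_download, args=(i,)) for i in range(N)]
    for t in threads:
        t.start()  # time.sleep releases the GIL, so the waits overlap
    for t in threads:
        t.join()
    return time.perf_counter() - start


def run_async():
    async def main():
        # All coroutines wait concurrently on a single thread
        await asyncio.gather(*(fake_download_async(i) for i in range(N)))

    start = time.perf_counter()
    asyncio.run(main())
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {run_sequential():.2f}s")  # about N * DELAY
    print(f"threads:    {run_threads():.2f}s")     # about DELAY
    print(f"asyncio:    {run_async():.2f}s")       # about DELAY
```

On real traffic the ratios also depend on bandwidth, server-side rate limits, and page size, so this simulation is not expected to reproduce the 142 s / 8 s / under 3 s figures exactly.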

Single-threaded scraping of Doutu


```python
import requests
from lxml import etree
import os
import time


def get_img_urls():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.71 Mobile Safari/537.36'
    }
    # verify=False skips TLS certificate verification (this triggers the warning discussed below)
    res = requests.get("https://www.pkdoutu.com/photo/list/", headers=headers, verify=False)
    selector = etree.HTML(res.text)
    # Each <img> in the list carries the image URL in its data-backup attribute
    img_urls = selector.xpath('//*[@id="pic-detail"]/div/div[2]/div[2]/ul/li/div/div/a/img[@data-backup]/@data-backup')
    return img_urls


def download_img(url):
    res = requests.get(url)
    img_name = os.path.basename(url)
    path = os.path.join("imgs", img_name)
    if not os.path.exists("imgs"):
        os.mkdir("imgs")
    with open(path, "wb") as f:
        # iter_content() with no argument yields the body in 1-byte chunks
        for i in res.iter_content():
            f.write(i)
    print(f"{img_name} downloaded")


start = time.time()
img_urls = get_img_urls()
# Download the images one after another
for img_url in img_urls:
    download_img(img_url)
print(f"Total time: {time.time() - start} seconds")
```
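`download_img` names each file with `os.path.basename(url)`. That works when the image URL ends in a plain file name, but if a URL carried a query string (for example `...cat.jpg?width=200`, an assumed format, not one observed on this site), the `?width=200` part would end up in the file name. A minimal sketch of a hypothetical helper, `filename_from_url`, that parses the URL path first:

```python
import posixpath
from urllib.parse import unquote, urlsplit


def filename_from_url(url, default="image"):
    """Return the last path segment of url, ignoring any query string or fragment."""
    # urlsplit separates scheme, netloc, path, query and fragment
    path = urlsplit(url).path
    # Decode %-escapes first, so an encoded '/' (%2F) becomes a separator and is stripped by basename
    # URL paths always use '/', so posixpath is used regardless of the local OS
    name = posixpath.basename(unquote(path))
    # A URL ending in '/' has no file name; fall back to a default
    return name or default
```

In `download_img`, the path line would then become `path = os.path.join("imgs", filename_from_url(url))`.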

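Running this prints a warning. It does not affect the logic of the code that follows. The warning is triggered by this call:

`requests.get("https://www.pkdoutu.com/photo/list/", headers=headers, verify=False)`

Because `verify` is set to `False`, requests skips verification of the site's TLS certificate, so urllib3 issues an `InsecureRequestWarning` saying the connection is not secure. To silence it, disable urllib3's warnings before sending any requests: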


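```python
import urllib3

# Silence urllib3's HTTP warnings (including InsecureRequestWarning) for the rest of the program
urllib3.disable_warnings()
```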
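`urllib3.disable_warnings()` hides urllib3's warnings for the whole program. If you would rather hide the warning only around the one request that uses `verify=False`, the standard-library `warnings` module can scope the filter. The helper name `ignore_warning` is hypothetical; the sketch itself depends only on the standard library.

```python
import contextlib
import warnings


@contextlib.contextmanager
def ignore_warning(category):
    """Suppress warnings of `category` (and its subclasses) inside the with-block only."""
    # catch_warnings() saves the current warning filters and restores them on exit
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", category)
        yield
```

Usage would look like `with ignore_warning(urllib3.exceptions.InsecureRequestWarning):` followed by the `requests.get(..., verify=False)` call in the indented block. Note that `warnings.catch_warnings` changes process-wide state and is not thread-safe, so for the multi-threaded version the global `urllib3.disable_warnings()` call is the simpler choice.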

Multi-threaded version


```python
import requests
from lxml import etree
import os
import time
import threading


def get_img_urls():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.71 Mobile Safari/537.36'
    }
    res = requests.get("https://www.pkdoutu.com/photo/list/", headers=headers)
    selector = etree.HTML(res.text)
    img_urls = selector.xpath('//*[@id="pic-detail"]/div/div[2]/div[2]/ul/li/div/div/a/img[@data-backup]/@data-backup')
    return img_urls


def download_img(url):
    res = requests.get(url)
    img_name = os.path.basename(url)
    path = os.path.join("imgs", img_name)
    # Several threads can reach this line at once; a separate exists()/mkdir() check
    # could race and raise FileExistsError, so let makedirs tolerate an existing folder
    os.makedirs("imgs", exist_ok=True)
    with open(path, "wb") as f:
        for i in res.iter_content():
            f.write(i)
    print(f"{img_name} downloaded")


start = time.time()
img_urls = get_img_urls()

# Start one thread per image URL
t_list = []
for img_url in img_urls:
    t = threading.Thread(target=download_img, args=(img_url,))
    t.start()
    t_list.append(t)

# Wait for every download thread to finish before reporting the elapsed time
for t in t_list:
    t.join()
print(f"Total time: {time.time() - start} seconds")
```

Asynchronous version


```python
from lxml import etree
import os
import time
import asyncio
import aiohttp

headers = {
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.71 Mobile Safari/537.36'
}


async def get_img_urls(session):
    # ssl=False skips certificate verification, like verify=False in requests
    async with session.get("https://www.pkdoutu.com/photo/list/", headers=headers, ssl=False) as response:
        selector = etree.HTML(await response.text())
        img_urls = selector.xpath('//*[@id="pic-detail"]/div/div[2]/div[2]/ul/li/div/div/a/img[@data-backup]/@data-backup')
        return img_urls


async def download_img(session, url):
    async with session.get(url, ssl=False) as response:
        img_name = os.path.basename(url)
        path = os.path.join("imgs", img_name)
        # No await between the check and mkdir, so no other task can interleave here
        if not os.path.exists("imgs"):
            os.mkdir("imgs")
        with open(path, "wb") as f:
            f.write(await response.content.read())
        print(f"{img_name} downloaded")


async def main():
    # Share one ClientSession (and its connection pool) across all requests
    async with aiohttp.ClientSession() as session:
        img_urls = await get_img_urls(session)
        # Schedule every download at once; they run concurrently on the event loop
        tasks = [asyncio.create_task(download_img(session, url)) for url in img_urls]
        await asyncio.wait(tasks)


start = time.time()
asyncio.run(main())
print(f"Total time: {time.time() - start} seconds")
```
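The async version fires every request at once. If the site starts refusing or throttling connections, one option is to cap the number of in-flight downloads with `asyncio.Semaphore`. This sketch is self-contained: `bounded_gather` is a hypothetical helper, the `fake_fetch` coroutine only sleeps as a stand-in for `download_img`, and the limit of 5 is an arbitrary assumption.

```python
import asyncio


async def bounded_gather(urls, fetch, limit=5):
    """Run fetch(url) for every URL with at most `limit` running at the same time."""
    semaphore = asyncio.Semaphore(limit)

    async def worker(url):
        # Only `limit` workers can hold the semaphore; the rest wait here
        async with semaphore:
            return await fetch(url)

    # gather returns results in the same order as urls
    return await asyncio.gather(*(worker(url) for url in urls))


async def demo():
    in_flight = 0
    peak = 0

    async def fake_fetch(url):
        # Hypothetical stand-in for download_img: tracks concurrency, then sleeps
        nonlocal in_flight, peak
        in_flight += 1
        peak = max(peak, in_flight)
        await asyncio.sleep(0.01)
        in_flight -= 1
        return url.upper()

    results = await bounded_gather([f"img{i}" for i in range(20)], fake_fetch, limit=5)
    return results, peak


if __name__ == "__main__":
    results, peak = asyncio.run(demo())
    print(results[:3], peak)
```

To use it with the article's code, pass a one-argument coroutine function wrapping `download_img`, binding any extra arguments (such as a shared session) with `functools.partial`.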